As the researchers analyzed how students completed their work on computers, they noticed that students who had access to AI or a human were less likely to refer to the reading materials. These two groups revised their essays primarily by interacting with ChatGPT or chatting with the human. Those with only the guidelines spent the most time looking over their essays.

The AI group spent less time evaluating their essays and making sure they understood what the assignment was asking them to do. The AI group was also prone to copying and pasting text that the bot had generated, even though researchers had prompted the bot not to write directly for the students. (It was apparently easy for the students to bypass this guardrail, even in the controlled laboratory.) Researchers mapped out all the cognitive processes involved in writing and saw that the AI students were most focused on interacting with ChatGPT.

“This highlights a crucial issue in human-AI interaction,” the researchers wrote. “Potential metacognitive laziness.” By that, they mean a dependence on AI assistance: offloading thought processes to the bot and not engaging directly with the tasks that are needed to synthesize, analyze and explain.

“Learners might become overly reliant on ChatGPT, using it to easily complete specific learning tasks without fully engaging in the learning,” the authors wrote.

The second study, by Anthropic, was released in April during the ASU+GSV education investor conference in San Diego. In this study, in-house researchers at Anthropic examined how university students actually interact with its AI bot, called Claude, a competitor to ChatGPT. That methodology is a big improvement over surveys of students, who may not accurately remember how they used AI.

Researchers began by collecting all the conversations over an 18-day period with people who had created Claude accounts using their university email addresses. (The description of the study says that the conversations were anonymized to protect student privacy.) Then, researchers filtered these conversations for signs that the person was likely to be a student seeking help with academics, schoolwork, studying, learning a new concept or academic research. Researchers ended up with 574,740 conversations to analyze.

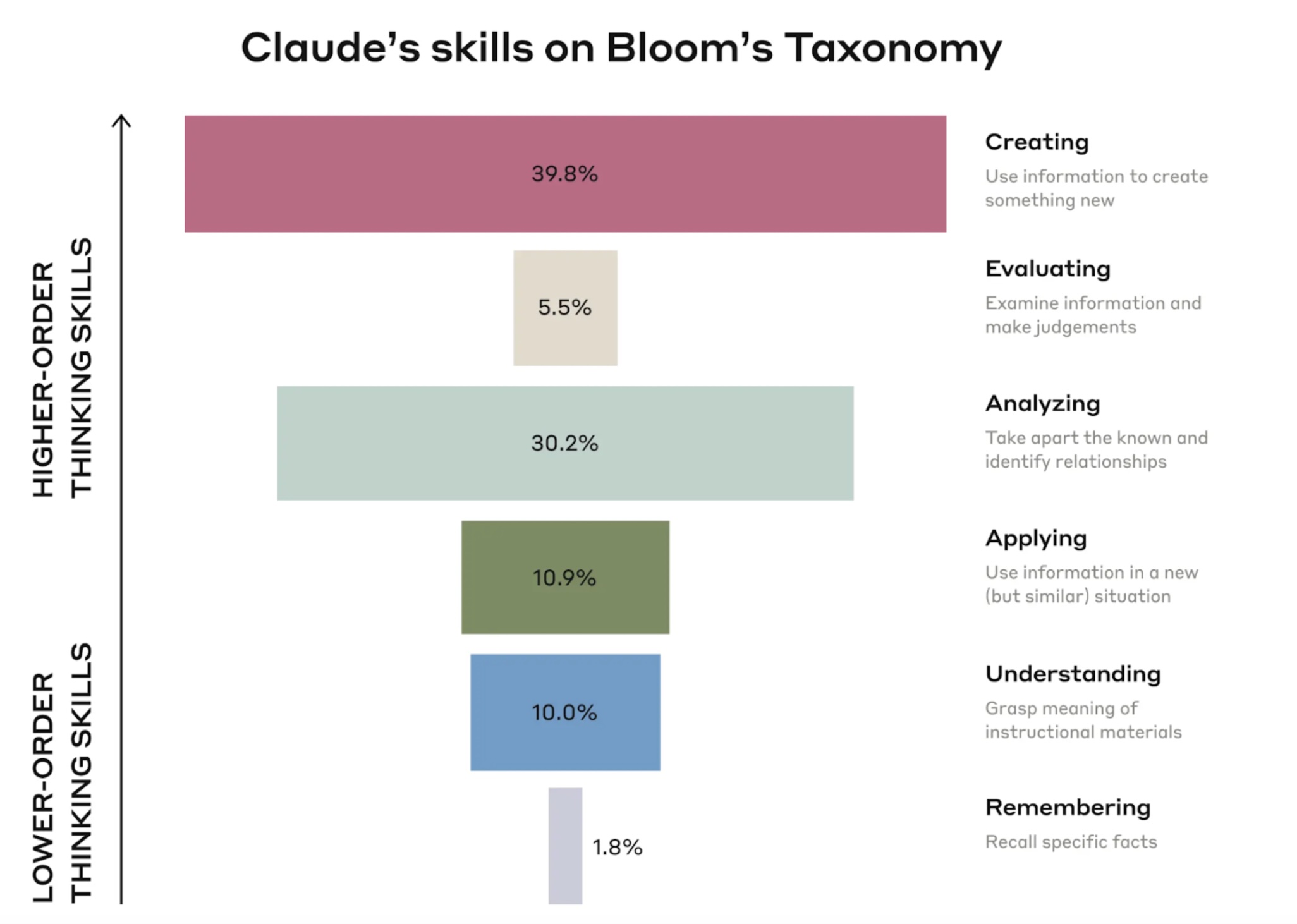

The results? Students primarily used Claude for creating things (40 percent of the conversations), such as building a coding project, and for analyzing (30 percent of the conversations), such as analyzing legal concepts.

Creating and analyzing are the most popular tasks university students ask Claude to do for them

Anthropic’s researchers noted that these were higher-order cognitive functions, not basic ones, according to a hierarchy of skills known as Bloom’s Taxonomy.

“This raises questions about ensuring students don’t offload critical cognitive tasks to AI systems,” the Anthropic researchers wrote. “There are legitimate worries that AI systems may provide a crutch for students, stifling the development of foundational skills needed to support higher-order thinking.”

Anthropic’s researchers also noticed that students were asking Claude for direct answers almost half the time, with minimal back-and-forth engagement. Researchers described how, even when students were engaging collaboratively with Claude, the conversations might not be helping students learn more. For example, a student might ask Claude to “solve probability and statistics homework problems with explanations.” That might spark “multiple conversational turns between AI and the student, but still offloads significant thinking to the AI,” the researchers wrote.

Anthropic was hesitant to say it saw direct evidence of cheating. Researchers wrote about an example of students asking for direct answers to multiple-choice questions, but Anthropic had no way of knowing whether it was a take-home exam or a practice test. The researchers also found examples of students asking Claude to rewrite texts to avoid plagiarism detection.

The hope is that AI can improve learning through immediate feedback and by personalizing instruction for each student. But these studies are showing that AI is also making it easier for students not to learn.

AI advocates say that educators need to redesign assignments so that students can’t complete them by asking AI to do the work for them, and to teach students how to use AI in ways that maximize learning. To me, this seems like wishful thinking. Real learning is hard, and if there are shortcuts, it’s human nature to take them.

Elizabeth Wardle, director of the Howe Center for Writing Excellence at Miami University, is worried both about writing and about human creativity.

“Writing is not correctness or avoiding error,” she posted on LinkedIn. “Writing is not just a product. The act of writing is a form of thinking and learning.”

Wardle cautioned about the long-term effects of too much reliance on AI. “When people use AI for everything, they are not thinking or learning,” she said. “And then what? Who will build, create, and invent when we just rely on AI to do everything?”

It’s a warning all of us should heed.